There are many options available when choosing a method to estimate the necessary effort within agile IT projects.

In Data Vault 2.0 projects, we recommend estimating the effort by applying a Function Point Analysis (FPA). In this article, you will learn why FPA is a good choice and why you should consider using this method in your own Data Vault 2.0 projects.

GOOD OLD PLANNING POKER

Probably the best known method for estimating work in agile projects is Planning Poker. Within the process, so-called story points, based upon the Fibonacci sequence (0, 0.5, 1, 2, 3, 5, 8, 13, 20, 40 and 100), are used to estimate the effort of a given task.

To begin the process, the entire development team sits together as each member simultaneously assigns story points to each user story that they feel are appropriate. If the story points match, the final estimate is made. Alternatively, if a consensus cannot be reached the effort is discussed until a decision is made.

However, it’s important to note that this technique involves a lot of effort as a result of either too many tasks or too many team members.

So the question is: Does Planning Poker work for Data Vault projects?

The short answer is “it makes little sense”.

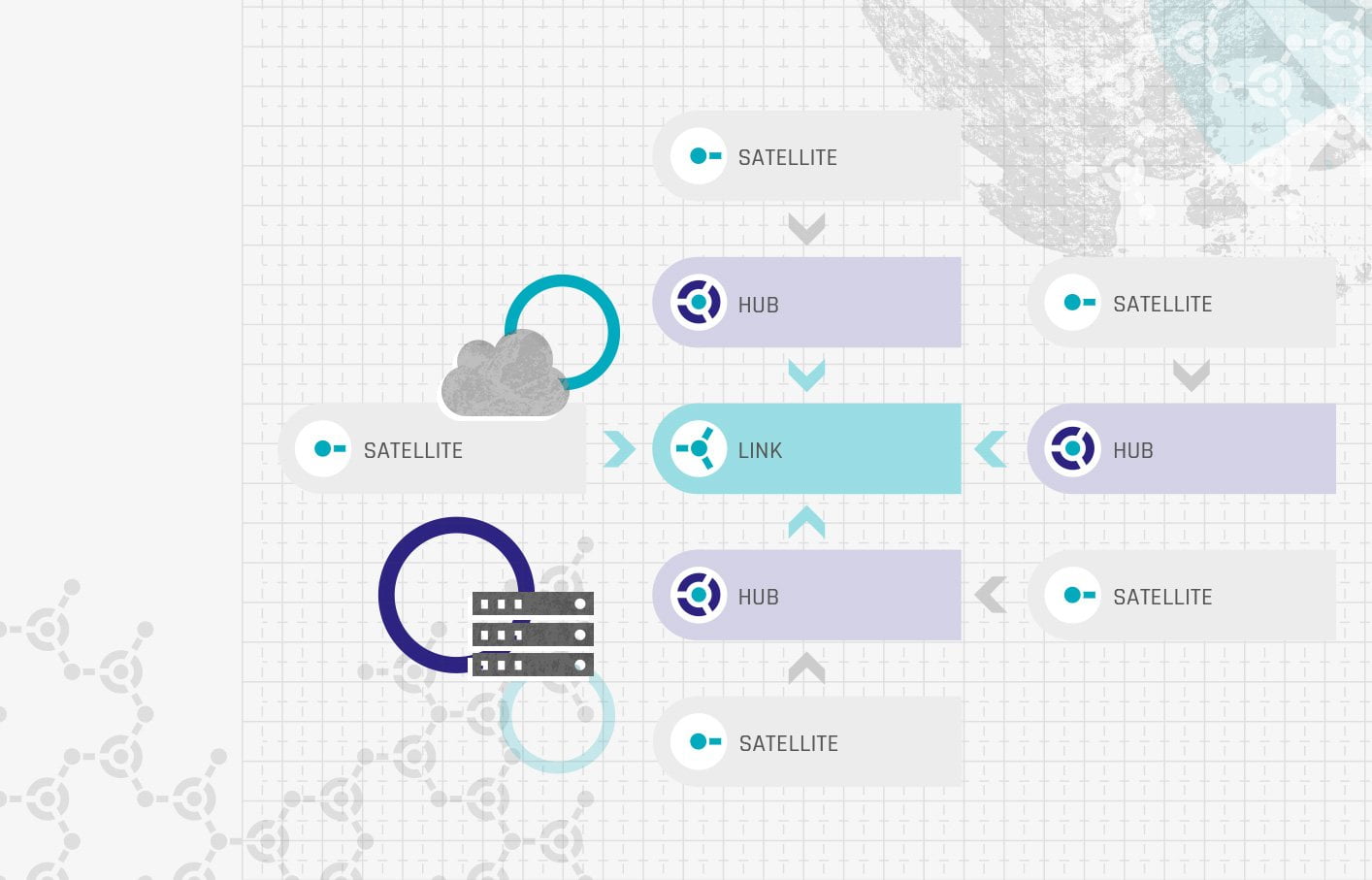

Since in Data Vault 2.0 the functional requirements are broken down into small items, there are numerous tasks that all have to be discussed and evaluated. In addition, the subtasks in Data Vault 2.0 are standardized artifacts, such as Hub, Link and Satellite. In principle, these artifacts always represent the same effort.

Why does Function Point Analysis suit it so well?

This is where FPA comes into play and the reason why it is widely used in agile software projects.

The idea is that software consists of the following characteristics, which represent the Function Point Types:

- External inputs (EI) → data entering the system

- External outputs (EO), external inquiries EQ → data leaving the system one way or another

- Internal logical files (ILF) → data manufactured and stored within the system

- External interface files (EIF) → data maintained outside the system but necessary to perform the task

With FPA, you break the functionality into smaller items for better analysis. As mentioned, this is already done in Data Vault 2.0 projects due to the standardized artifacts. This is the reason why FPA is very suitable for Data Vault projects.

How to apply FPA in Data Vault 2.0

Hence, to make good use of FPA in the Data Vault 2.0 methodology, the functional characteristics of software, external inputs, external outputs, external inquiries, internal logical files, and external interface files, must be adapted to reflect Data Vault projects. The following functional characteristics of data warehouses built with Data Vault are defined as:

- Stage load (EI)

- Hub load (ILF)

- Link load (ILF)

- Satellite load (ILF)

- Dimension load (ILF)

- Fact load (ILF)

- Report build (EO)

Please note that there are also other functional components that can be defined, like Business Vault entities, Point-in-time tables and so on.

Once you have defined these components, you should create a table that maps them to function points. Function points are used to quantify the amount of business functionality an element provides to a user. In general, it is recommended to add a complexity factor first:

| Complexity Factor | Person Hours per Function Point |

| Easy | 0.1 |

| Moderate | 0.2 |

| Difficult | 0.7 |

Then use the complexity factors and the assigned function points per component to calculate the estimated hours needed to add the respective functionality. Here is a short example of how the mapping table could look like for you:

| Component | Complexity Factor | Estimated Function Points | Estimated Total Hours |

| Hub Load | Easy | 2 | 0.2 |

| Dimension Load | Difficult | 3 | 2.1 |

| Report Build | Difficult | 5 | 3.5 |

The goal of estimation is to standardize the development of operational information systems by making the effort more expectable. This is due to the fact that when you use a systematic approach to estimate the needed effort to add components, it is possible to compare the estimated values with the actual values once the functionality is delivered. When both values are compared, your team can learn from these previous estimates and improve their future estimates by adjusting the function points per component. Also, keep in mind that your developers will gain experience over time or might lose experience due to replacement.

Conclusion

I hope this first glimpse into FPA helps you understand the basic value it can provide your team in Data Vault 2.0 projects. You can have a more in-depth look at how to apply FPA in Data Vault 2.0 projects by reading the book “Building a Scalable Data Warehouse with Data Vault 2.0” by Michael Olschimke and Dan Lindstedt.

Feel free to share this blog post to your colleagues and make sure to leave a comment on how your project estimates effort!

- by Simon Kutsche (Scalefree)

Get Updates and Support

Please send inquiries and feature requests to [email protected].

For Data Vault training and on-site training inquiries, please contact [email protected] or register at www.scalefree.com.

To support the creation of Visual Data Vault drawings in Microsoft Visio, a stencil is implemented that can be used to draw Data Vault models. The stencil is available at www.visualdatavault.com.

Newsletter

New Data Vault insights every month